In the previous post we looked at how to configure rclone with Dropbox.

This post shows how to configure rclone to connect to Amazon S3.

If you haven't installed rclone yet, see the rclone installation guide first.

Start with the following command:

rclone config

Enter n (New remote) to create a new connection.

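At the name prompt, we'll name this connection amazons3.

Next, enter 4 to select the S3 cloud storage service. The menu numbers may differ in other rclone versions; since the prompt also accepts a typed value, you can enter s3 instead.

Enter 1 to select the provider Amazon Web Services (AWS) S3.

In the next step, choose how rclone obtains the S3 credentials; enter 1 to type them in at the following prompts.

Then enter the access_key_id and secret_access_key of your AWS account.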
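
The env_auth prompt decides between typing the keys in (false) and reading them from the environment (true). As a rough sketch, the Python helper below picks a value by checking whether the standard AWS variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are set. The function name choose_env_auth is hypothetical, and the check ignores IAM roles on EC2/ECS, which env_auth = true can also use.

```python
import os


def choose_env_auth(environ=None):
    """Return "true" if both AWS credential variables are set, else "false".

    Hypothetical helper: it only inspects environment variables and does
    not detect IAM roles (EC2/ECS metadata).
    """
    env = os.environ if environ is None else environ
    has_keys = bool(env.get("AWS_ACCESS_KEY_ID")) and bool(
        env.get("AWS_SECRET_ACCESS_KEY")
    )
    return "true" if has_keys else "false"


# Value to give at the env_auth prompt on this machine.
print("env_auth =", choose_env_auth())
```

In this tutorial the keys are typed in at the prompts, which corresponds to env_auth = false.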

Choose the region that suits you; in this example I'll choose Asia Pacific (Singapore), so I enter 10. Leave endpoint blank, then enter 10 again at location_constraint so that it matches the region.

Next, rclone asks for the canned ACL. I'll enter 1 (private), which gives the owner full control and no one else any access.

For the server-side encryption algorithm used to store objects in S3, I'll leave server_side_encryption blank and press Enter. Leave sse_kms_key_id and storage_class blank as well and press Enter to move on.

Finally, rclone asks whether to edit the advanced config and then to confirm the information entered above; press Enter at both prompts to accept the defaults.
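
The same answers can probably be supplied without the interactive menu through rclone config create. The Python sketch below only builds the argument list for that command from the values chosen above. The helper name build_config_create is hypothetical, the XXXXX keys are placeholders, and it assumes your rclone version accepts options as key value pairs after the remote type.

```python
def build_config_create(name, options):
    """Build argv for `rclone config create <name> s3 key value ...`."""
    argv = ["rclone", "config", "create", name, "s3"]
    for key, value in options.items():
        argv += [key, value]
    return argv


# Values chosen in the interactive steps above; keys are placeholders.
options = {
    "provider": "AWS",
    "env_auth": "false",
    "access_key_id": "XXXXX",
    "secret_access_key": "XXXXX",
    "region": "ap-southeast-1",
    "location_constraint": "ap-southeast-1",
    "acl": "private",
}

argv = build_config_create("amazons3", options)
print(" ".join(argv))
```

The sketch prints the command instead of running it; to execute it, pass argv to subprocess.run on a machine where rclone is installed.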

The output of the configuration process looks like this:

phuongdm@Phuongdm-PC:~$ rclone config

Current remotes:

Name Type

==== ====

dropbox dropbox

ftp ftp

ggdrive drive

e) Edit existing remote

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q> n

name> amazons3

Type of storage to configure.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / 1Fichier

\ "fichier"

2 / Alias for an existing remote

\ "alias"

3 / Amazon Drive

\ "amazon cloud drive"

4 / Amazon S3 Compliant Storage Provider (AWS, Alibaba, Ceph, Digital Ocean, Dreamhost, IBM COS, Minio, Tencent COS, etc)

\ "s3"

5 / Backblaze B2

\ "b2"

6 / Box

\ "box"

7 / Cache a remote

\ "cache"

8 / Citrix Sharefile

\ "sharefile"

9 / Dropbox

\ "dropbox"

10 / Encrypt/Decrypt a remote

\ "crypt"

11 / FTP Connection

\ "ftp"

12 / Google Cloud Storage (this is not Google Drive)

\ "google cloud storage"

13 / Google Drive

\ "drive"

14 / Google Photos

\ "google photos"

15 / Hubic

\ "hubic"

16 / In memory object storage system.

\ "memory"

17 / Jottacloud

\ "jottacloud"

18 / Koofr

\ "koofr"

19 / Local Disk

\ "local"

20 / Mail.ru Cloud

\ "mailru"

21 / Mega

\ "mega"

22 / Microsoft Azure Blob Storage

\ "azureblob"

23 / Microsoft OneDrive

\ "onedrive"

24 / OpenDrive

\ "opendrive"

25 / OpenStack Swift (Rackspace Cloud Files, Memset Memstore, OVH)

\ "swift"

26 / Pcloud

\ "pcloud"

27 / Put.io

\ "putio"

28 / QingCloud Object Storage

\ "qingstor"

29 / SSH/SFTP Connection

\ "sftp"

30 / Sugarsync

\ "sugarsync"

31 / Tardigrade Decentralized Cloud Storage

\ "tardigrade"

32 / Transparently chunk/split large files

\ "chunker"

33 / Union merges the contents of several upstream fs

\ "union"

34 / Webdav

\ "webdav"

35 / Yandex Disk

\ "yandex"

36 / http Connection

\ "http"

37 / premiumize.me

\ "premiumizeme"

38 / seafile

\ "seafile"

Storage> 4

** See help for s3 backend at: https://rclone.org/s3/ **

Choose your S3 provider.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Amazon Web Services (AWS) S3

\ "AWS"

2 / Alibaba Cloud Object Storage System (OSS) formerly Aliyun

\ "Alibaba"

3 / Ceph Object Storage

\ "Ceph"

4 / Digital Ocean Spaces

\ "DigitalOcean"

5 / Dreamhost DreamObjects

\ "Dreamhost"

6 / IBM COS S3

\ "IBMCOS"

7 / Minio Object Storage

\ "Minio"

8 / Netease Object Storage (NOS)

\ "Netease"

9 / Scaleway Object Storage

\ "Scaleway"

10 / StackPath Object Storage

\ "StackPath"

11 / Tencent Cloud Object Storage (COS)

\ "TencentCOS"

12 / Wasabi Object Storage

\ "Wasabi"

13 / Any other S3 compatible provider

\ "Other"

provider> 1

Get AWS credentials from runtime (environment variables or EC2/ECS meta data if no env vars).

Only applies if access_key_id and secret_access_key is blank.

Enter a boolean value (true or false). Press Enter for the default ("false").

Choose a number from below, or type in your own value

1 / Enter AWS credentials in the next step

\ "false"

2 / Get AWS credentials from the environment (env vars or IAM)

\ "true"

env_auth> 1

AWS Access Key ID.

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

access_key_id> XXXXX

AWS Secret Access Key (password)

Leave blank for anonymous access or runtime credentials.

Enter a string value. Press Enter for the default ("").

secret_access_key> XXXXX

Region to connect to.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

/ The default endpoint - a good choice if you are unsure.

1 | US Region, Northern Virginia or Pacific Northwest.

| Leave location constraint empty.

\ "us-east-1"

/ US East (Ohio) Region

2 | Needs location constraint us-east-2.

\ "us-east-2"

/ US West (Oregon) Region

3 | Needs location constraint us-west-2.

\ "us-west-2"

/ US West (Northern California) Region

4 | Needs location constraint us-west-1.

\ "us-west-1"

/ Canada (Central) Region

5 | Needs location constraint ca-central-1.

\ "ca-central-1"

/ EU (Ireland) Region

6 | Needs location constraint EU or eu-west-1.

\ "eu-west-1"

/ EU (London) Region

7 | Needs location constraint eu-west-2.

\ "eu-west-2"

/ EU (Stockholm) Region

8 | Needs location constraint eu-north-1.

\ "eu-north-1"

/ EU (Frankfurt) Region

9 | Needs location constraint eu-central-1.

\ "eu-central-1"

/ Asia Pacific (Singapore) Region

10 | Needs location constraint ap-southeast-1.

\ "ap-southeast-1"

/ Asia Pacific (Sydney) Region

11 | Needs location constraint ap-southeast-2.

\ "ap-southeast-2"

/ Asia Pacific (Tokyo) Region

12 | Needs location constraint ap-northeast-1.

\ "ap-northeast-1"

/ Asia Pacific (Seoul)

13 | Needs location constraint ap-northeast-2.

\ "ap-northeast-2"

/ Asia Pacific (Mumbai)

14 | Needs location constraint ap-south-1.

\ "ap-south-1"

/ Asia Patific (Hong Kong) Region

15 | Needs location constraint ap-east-1.

\ "ap-east-1"

/ South America (Sao Paulo) Region

16 | Needs location constraint sa-east-1.

\ "sa-east-1"

region> 10

Endpoint for S3 API.

Leave blank if using AWS to use the default endpoint for the region.

Enter a string value. Press Enter for the default ("").

endpoint>

Location constraint - must be set to match the Region.

Used when creating buckets only.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Empty for US Region, Northern Virginia or Pacific Northwest.

\ ""

2 / US East (Ohio) Region.

\ "us-east-2"

3 / US West (Oregon) Region.

\ "us-west-2"

4 / US West (Northern California) Region.

\ "us-west-1"

5 / Canada (Central) Region.

\ "ca-central-1"

6 / EU (Ireland) Region.

\ "eu-west-1"

7 / EU (London) Region.

\ "eu-west-2"

8 / EU (Stockholm) Region.

\ "eu-north-1"

9 / EU Region.

\ "EU"

10 / Asia Pacific (Singapore) Region.

\ "ap-southeast-1"

11 / Asia Pacific (Sydney) Region.

\ "ap-southeast-2"

12 / Asia Pacific (Tokyo) Region.

\ "ap-northeast-1"

13 / Asia Pacific (Seoul)

\ "ap-northeast-2"

14 / Asia Pacific (Mumbai)

\ "ap-south-1"

15 / Asia Pacific (Hong Kong)

\ "ap-east-1"

16 / South America (Sao Paulo) Region.

\ "sa-east-1"

location_constraint> 10

Canned ACL used when creating buckets and storing or copying objects.

This ACL is used for creating objects and if bucket_acl isn't set, for creating buckets too.

For more info visit https://docs.aws.amazon.com/AmazonS3/latest/dev/acl-overview.html#canned-acl

Note that this ACL is applied when server side copying objects as S3

doesn't copy the ACL from the source but rather writes a fresh one.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Owner gets FULL_CONTROL. No one else has access rights (default).

\ "private"

2 / Owner gets FULL_CONTROL. The AllUsers group gets READ access.

\ "public-read"

/ Owner gets FULL_CONTROL. The AllUsers group gets READ and WRITE access.

3 | Granting this on a bucket is generally not recommended.

\ "public-read-write"

4 / Owner gets FULL_CONTROL. The AuthenticatedUsers group gets READ access.

\ "authenticated-read"

/ Object owner gets FULL_CONTROL. Bucket owner gets READ access.

5 | If you specify this canned ACL when creating a bucket, Amazon S3 ignores it.

\ "bucket-owner-read"

/ Both the object owner and the bucket owner get FULL_CONTROL over the object.

6 | If you specify this canned ACL when creating a bucket, Amazon S3 ignores it.

\ "bucket-owner-full-control"

acl> 1

The server-side encryption algorithm used when storing this object in S3.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / None

\ ""

2 / AES256

\ "AES256"

3 / aws:kms

\ "aws:kms"

server_side_encryption>

If using KMS ID you must provide the ARN of Key.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / None

\ ""

2 / arn:aws:kms:*

\ "arn:aws:kms:us-east-1:*"

sse_kms_key_id>

The storage class to use when storing new objects in S3.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Default

\ ""

2 / Standard storage class

\ "STANDARD"

3 / Reduced redundancy storage class

\ "REDUCED_REDUNDANCY"

4 / Standard Infrequent Access storage class

\ "STANDARD_IA"

5 / One Zone Infrequent Access storage class

\ "ONEZONE_IA"

6 / Glacier storage class

\ "GLACIER"

7 / Glacier Deep Archive storage class

\ "DEEP_ARCHIVE"

8 / Intelligent-Tiering storage class

\ "INTELLIGENT_TIERING"

storage_class>

Edit advanced config? (y/n)

y) Yes

n) No (default)

y/n>

Remote config

--------------------

[amazons3]

type = s3

provider = AWS

env_auth = false

access_key_id = XXXXX

secret_access_key = XXXXX

region = ap-southeast-1

location_constraint = ap-southeast-1

acl = private

--------------------

y) Yes this is OK (default)

e) Edit this remote

d) Delete this remote

y/e/d>

Current remotes:

Name Type

==== ====

amazons3 s3

dropbox dropbox

ftp ftp

ggdrive drive

e) Edit existing remote

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q> q

That completes the rclone configuration for AWS S3.
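
rclone saves the remote as an INI-style section in its configuration file (rclone config file prints the path). As a minimal sketch, the Python snippet below parses such a section and checks the settings chosen in this tutorial. The SAMPLE text mirrors the summary printed by rclone above, the helper name load_remote is hypothetical, and the approach assumes the file is not protected by a configuration password.

```python
import configparser

# Mirrors the [amazons3] summary printed by `rclone config`; keys are masked.
SAMPLE = """[amazons3]
type = s3
provider = AWS
env_auth = false
access_key_id = XXXXX
secret_access_key = XXXXX
region = ap-southeast-1
location_constraint = ap-southeast-1
acl = private
"""


def load_remote(config_text, name):
    """Parse rclone-style INI text and return one remote's options as a dict."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    return dict(parser[name])


remote = load_remote(SAMPLE, "amazons3")
# location_constraint should match the region, as the prompt requires.
print(remote["type"], remote["region"], remote["location_constraint"])
```

To check a real setup, read the file reported by rclone config file and pass its contents in place of SAMPLE.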

To check that it works, we can use the following commands:

phuongdm@Phuongdm-PC:~$ rclone copy Downloads/backup.png amazons3:phuongdm

phuongdm@Phuongdm-PC:~$ rclone lsd amazons3:

-1 2020-10-27 11:26:20 -1 phuongdm

phuongdm@Phuongdm-PC:~$ rclone ls amazons3:

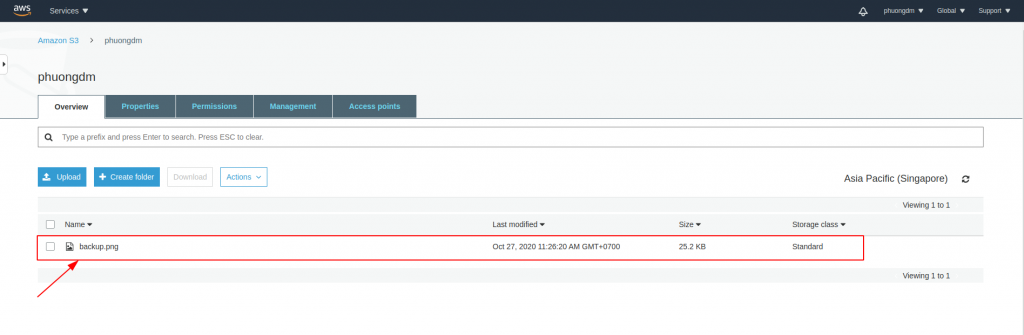

25834 phuongdm/backup.pngKiểm tra lại trên trang quản trị của S3 sẽ thấy bucket phuongdm được tạo (nếu chưa tồn tại) và file ảnh backup.png được copy từ server đến bucket phuongdm:
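
If you want to script this check, the rclone ls output can be parsed into size and path pairs. The Python sketch below works on a sample line taken from the output above; the helper name parse_ls is hypothetical, and it assumes each line has the form "size path" as shown in this tutorial.

```python
def parse_ls(output):
    """Turn `rclone ls` output into a list of (size_in_bytes, path) tuples."""
    entries = []
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split only once so that paths containing spaces stay intact.
        size, path = line.split(None, 1)
        entries.append((int(size), path))
    return entries


# Sample line copied from the `rclone ls amazons3:` output in this post.
sample = "    25834 phuongdm/backup.png\n"
entries = parse_ls(sample)
print(entries)
```

On a machine with rclone installed, the sample string could be replaced by the stdout of subprocess.run(["rclone", "ls", "amazons3:"], capture_output=True, text=True, check=True).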
